The Problem: Drift, Not Rot

We usually treat data quality like a binary state—good or bad, clean or corrupt. But the real enemy isn’t corruption. It’s drift. Data doesn’t rot. It drifts. And if you don’t track how and when that drift happens, even the best system will eventually give you an answer that feels right but isn’t.

I saw this firsthand with a sentiment-based scorecarding tool I helped build. It combined deterministic logic with generative AI to produce narratives from signals: intent to adopt streaming, willingness to invest, internal alignment. We fed it everything from recent architecture diagrams to SEC filings, and overall it gave us useful insight into the business. But in one case, between the last scorecard run and the stakeholder meeting it was meant to prep for, a public statement dropped: clear evidence that the company had pivoted toward streaming. We missed it. Nothing broke; the system simply hadn’t caught up to reality. The scorecard made no mention of it, and our recommendation was based on yesterday’s worldview.

This isn’t unique to sales or strategy. In finance, a compliance model might keep recommending products even though a regulation changed yesterday. In healthcare, a clinical assistant might surface treatment guidelines that were quietly revised last week. In operations, a supply chain optimizer might propose vendors whose eligibility expired overnight. In each case, the data itself wasn’t corrupt—but the world had moved on.

Evidence from the field:

Exact “staleness rates” for RAG aren’t well established yet, but adjacent evidence shows the stakes. In healthcare, reviews flag timeliness as a core motivation for retrieval and note gaps in up-to-date evaluation datasets, while the clinical decision support (CDS) literature documents harm when guidance updates lag [1]. In finance, compliance case studies report errors when policy changes outpace systems [2]. More broadly, industry surveys link weak data readiness to failed AI initiatives [3] and material losses [4], underscoring that freshness is not a niche issue.

While these studies don’t focus exclusively on RAG, they demonstrate the real-world cost of data staleness [1-4].

The Framework: Doorman, Ropes, and Clock

So how do you make systems aware of drift? Think of them like a front desk where every question is checked before it’s answered.

- The Doorman decides which sources are allowed to contribute. Every source has a name, an owner, and a log.

- The Ropes guide what data is appropriate for a given role, purpose, or region. Labels travel with the data, and if they don’t match, the data doesn’t get used.

- The Clock ensures that even clean, verified data respects time. Fresh, usable, historical, retired: answers must carry when they were last valid.

Together, these three controls prevent trust from eroding slowly through answers that look fine but are no longer true.
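To make the first control concrete, here is a minimal Python sketch of a Doorman as an allow-list in which every source has a name, an owner, and a log. The class names, the `register` and `admit` methods, and the source names are hypothetical and chosen only for illustration; this is not any vendor's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Source:
    name: str
    owner: str


@dataclass
class Doorman:
    """Hypothetical allow-list: only registered sources may contribute."""
    sources: dict[str, Source] = field(default_factory=dict)
    log: list[dict] = field(default_factory=list)

    def register(self, name: str, owner: str) -> None:
        self.sources[name] = Source(name, owner)

    def admit(self, name: str) -> bool:
        allowed = name in self.sources
        # Every decision is logged, including refusals.
        self.log.append({
            "source": name,
            "owner": self.sources[name].owner if allowed else None,
            "admitted": allowed,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return allowed


doorman = Doorman()
doorman.register("pricing_policy", owner="pricing-team")
print(doorman.admit("pricing_policy"))  # True
print(doorman.admit("random_upload"))   # False
```

Logging refusals as well as admissions is a design choice in this sketch: it lets an owner see which unregistered sources people tried to use.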

How It Applies Across AI Approaches

It’s easy to assume data freshness is only a Retrieval-Augmented Generation (RAG) concern, since that’s the pattern everyone seems to reach for first. In reality, every AI approach must reckon with the lifetime of its data:

- RAG (Retrieval-Augmented Generation): Without lifecycle controls, retrieval serves stale context. Variants like CRAG (Corrective RAG), GraphRAG, and hybrid search improve precision, but freshness still depends on governance.

- ICL (In-Context Learning): Lightweight, but entirely dependent on what you put in the window. If snippets are stale, so are the answers.

- Knowledge Graphs: Great for relationships and explainability, but graphs drift as entities and edges change.

- Agentic Models: Can fetch live data, but governance shifts to which tools they can call and whether those tools are current.

- Fine-Tuning: Bakes knowledge into weights: powerful for stability, terrible for agility. Updates require retraining.

Each approach solves different problems. But none escape the same truth: without lifecycle management, even good data turns into bad answers.

Making It Real: Starting Points

Governance doesn’t need to be a massive platform shift. You can start small and build progressively. This is where a maturity model helps: it shows capability levels in sequence. Most organizations begin with simple labeling, then add time awareness, then receipts (audit trails), and finally automated governance.

Checklist: Where to Start

Label your data at the source (purpose, region, sensitivity).

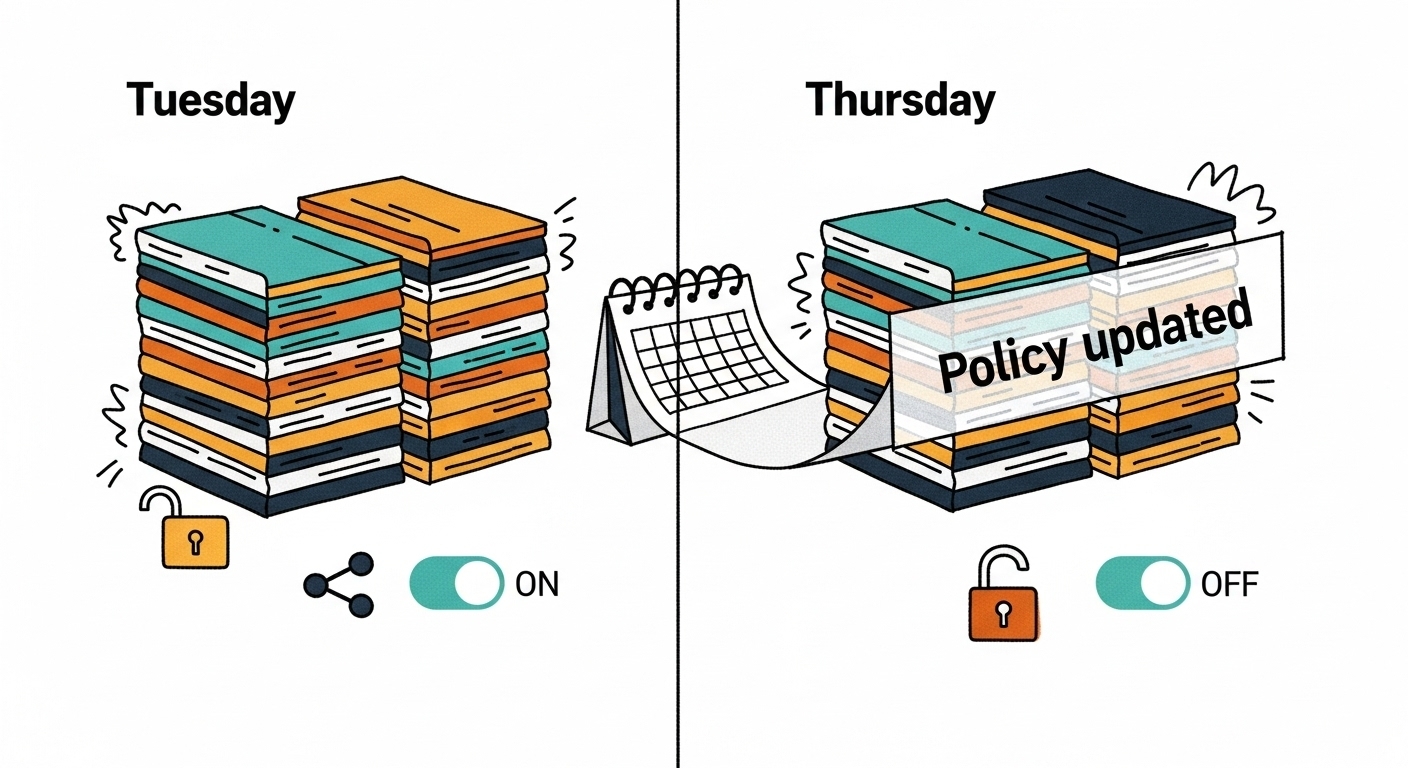

Define time windows (hot, warm, cold, revoked).

Add receipts to every output (sources, when retrieved, why eligible).

Close the loop with nudges and alerts when sources change.

Maturity Model:

Level 1: Labels only

Level 2: Labels + time bands

Level 3: Receipts included in every answer

Level 4: Automated nudges and policy epochs

Concrete examples:

- Labels: A pricing document might carry purpose:pricing_decision,region:us-east,sensitivity:internal. Enforcement means unlabeled content simply isn’t eligible for retrieval (“no label, no service”).

- Time bands: Define categories such as Hot (0–7 days), Warm (7–30 days), Cold (30–90 days), and Revoked (expired or invalid). When retrieval runs, only content in allowed bands is considered current.

- Receipts: A vague record might say “used policy docs.” A good receipt says “used pricing_policy_v6 (valid until 2025-06-30), retrieved 14:22Z, purpose=pricing_decision.” The difference is actionability.
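The label and time-band examples above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a reference implementation: the function names are hypothetical, content older than 90 days is treated as revoked, and a document exactly on a boundary (day 7 or day 30) falls into the fresher band. The text does not specify either of those last two rules.

```python
from datetime import datetime, timedelta, timezone

# Assumption: these three keys are mandatory ("no label, no service").
REQUIRED_LABEL_KEYS = {"purpose", "region", "sensitivity"}


def parse_labels(raw: str) -> dict[str, str]:
    """Parse 'key:value,key:value' into a dict, skipping malformed items."""
    return dict(item.split(":", 1) for item in raw.split(",") if ":" in item)


def time_band(last_valid: datetime, now: datetime, revoked: bool = False) -> str:
    """Map content age to hot / warm / cold / revoked."""
    age = now - last_valid
    # Assumption: anything past 90 days counts as expired.
    if revoked or age > timedelta(days=90):
        return "revoked"
    if age <= timedelta(days=7):
        return "hot"
    if age <= timedelta(days=30):
        return "warm"
    return "cold"


def eligible(raw_labels: str, band: str, allowed_bands: set[str]) -> bool:
    """Content is eligible only if fully labeled and in an allowed band."""
    labels = parse_labels(raw_labels)
    if not REQUIRED_LABEL_KEYS.issubset(labels):
        return False
    return band in allowed_bands


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
doc_labels = "purpose:pricing_decision,region:us-east,sensitivity:internal"
band = time_band(now - timedelta(days=12), now)
print(band)                                         # warm
print(eligible(doc_labels, band, {"hot", "warm"}))  # True
print(eligible("", band, {"hot", "warm"}))          # False: unlabeled content
```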

Implementation hints:

- Start with one data source and one decision flow where staleness causes visible problems (e.g., pricing, claims, product eligibility).

- Begin with manual labeling before investing in automation; get the categories right first.

- Expand gradually: labels → time bands → receipts → alerts and automation.

This section bridges the conceptual (Doorman, Ropes, Clock) with the technical (indices, Flink, audit logs). The idea is to give you a pathway: start simple, prove value, then add layers as the risk of drift justifies it.

See the Before/After Walkthrough near the end for a concrete example of the same flow in action.

Costs, Change, and Failure Modes

Key Considerations:

Cost: Metadata, labels, and governance reviews add overhead—but far less than the cost of acting on stale decisions.

Change Management: Governance sticks when teams build habits, not just tooling. Defaults, nudges, and clear ownership matter.

Failure Modes: Governance itself can drift—labels, policies, and audit logs must be reviewed regularly or they risk going stale. For example, compliance labels can become meaningless if regulations change but the labels are never updated, leaving systems confidently applying yesterday’s rules.

For Practitioners: Technical Patterns

    graph LR
        A[User Question] --> B["Doorman (Confluent mcp-server)"]
        B --> C[Flink: Enrich, Mask, Label, Time Bands]
        C --> D{Retrieval Layer}
        D -->|Canonical Index| E[Governed, Pipeline Data]
        D -->|Scratchpad Index| F["Ephemeral Uploads w/ TTL"]
        E --> G["Model (Claude / Gemini / Azure OpenAI / ChatGPT)"]
        F --> G
        G --> H["Answer + Receipts (Sources, Time, Eligibility)"]

Figure: How a question flows through Doorman, Flink, retrieval, the model, and back as an auditable answer.

This section is for those who want to see how the Doorman, Ropes, and Clock framework maps to a real AI architecture. If you’re an engineer or data professional, here’s how the pieces fit together.

Think of the flow like this: a user sends a question → the Doorman approves which tools can respond → Flink enriches and labels the data in motion → retrieval checks canonical and scratchpad indices → the model generates an answer → the system attaches receipts.

Sidebar: Why Two Indices? (example pattern — canonical = curated docs, scratchpad = ad-hoc PDF uploads)

Canonical index: your governed, pipeline-built content. Labeled, time-stamped, and auditable.

Scratchpad index: ad-hoc uploads like a PDF or URL. Temporary, with a time-to-live.

Both enforce the same guardrails before ranking. This way a quick upload never pollutes your trusted corpus, and answers show whether they came from governed data or a scratchpad.

Step 1: Control Plane (the Doorman, powered by Confluent mcp-server)

Confluent’s mcp-server simplifies this role. Instead of standing up your own MCP control plane and manually registering every connector, Confluent provides a server that already knows how to expose topics, connectors, and Flink views as named MCP tools. Each tool comes with a schema, an owner, and audit logs out of the box. That means no side doors—only approved Confluent-managed resources are visible to your LLM. Why it matters: you get the discipline of a Doorman without having to build one yourself.

Step 2: Data Plane (Confluent)

Confluent underpins the real-time fabric. Governed event streams keep context current. Schema Registry enforces compatibility rules as schemas evolve, so an old field or incompatible payload doesn’t sneak through. Stream Governance applies labels (purpose, region, sensitivity) that travel with the data. Flink processes streams in motion: masking PII, stamping time bands (hot, warm, cold, revoked), and enforcing revocations. Why it matters: you stop drift at the pipeline, not after the fact. In short, Confluent is the substrate that keeps context fresh and labeled so Doorman, Ropes, and Clock can enforce policy in real time, no matter which model sits on top.

Step 3: Retrieval Layer

Two indices (specialized data stores)—a canonical index and a scratchpad index—filter by labels and time bands before any semantic ranking. Why it matters: the model only ever sees data that is both current and eligible.
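A minimal Python sketch of this filter-then-rank order follows. It assumes both indices are plain in-memory lists and that `score` stands in for a semantic similarity score computed elsewhere; the `Chunk` type, the `retrieve` function, and all sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class Chunk:
    text: str
    labels: frozenset[str]
    band: str                               # hot, warm, cold, or revoked
    index: str                              # "canonical" or "scratchpad"
    expires_at: Optional[datetime] = None   # TTL, used for scratchpad uploads
    score: float = 0.0                      # stand-in for semantic similarity


def retrieve(chunks, must_have, allowed_bands, now, k=3):
    """Apply label, time-band, and TTL guardrails first, then rank."""
    eligible = [
        c for c in chunks
        if must_have <= c.labels
        and c.band in allowed_bands
        and (c.expires_at is None or c.expires_at > now)
    ]
    return sorted(eligible, key=lambda c: c.score, reverse=True)[:k]


now = datetime(2025, 6, 1, 14, 22, tzinfo=timezone.utc)
need = {"tenant:acme", "purpose:pricing_decision"}
labels = frozenset(need)
chunks = [
    Chunk("current policy", labels, "hot", "canonical", score=0.71),
    Chunk("old policy", labels, "cold", "canonical", score=0.93),
    Chunk("uploaded PDF", labels, "hot", "scratchpad", now + timedelta(hours=1), 0.80),
    Chunk("expired upload", labels, "hot", "scratchpad", now - timedelta(hours=1), 0.99),
    Chunk("other tenant", frozenset({"tenant:globex"}), "hot", "canonical", score=0.95),
]
for c in retrieve(chunks, need, {"hot", "warm"}, now):
    print(c.index, c.text)
# scratchpad uploaded PDF
# canonical current policy
```

The three highest-scoring chunks never reach the ranker because they fail a guardrail. The `index` field stays on each result so an answer can show whether it came from governed data or a scratchpad.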

Step 4: Audit & Receipts

Every answer includes the sources used, when they were retrieved, and why they were eligible. Example: “12% discount; sources: pricing_policy_v6, contract_acme_2025; retrieved at 14:22Z; purpose: pricing_decision.” Why it matters: stakeholders can trust not only the answer but also its lineage.
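As a sketch of how such a receipt could be attached, the Python below builds the example string from structured fields. The `Receipt` type and `answer_with_receipt` function are hypothetical, and the calendar date is an assumption added so the timestamp is complete; the example above gives only the time.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Receipt:
    sources: tuple[str, ...]
    retrieved_at: datetime
    purpose: str

    def render(self) -> str:
        stamp = self.retrieved_at.astimezone(timezone.utc).strftime("%H:%MZ")
        return (f"sources: {', '.join(self.sources)}; "
                f"retrieved at {stamp}; purpose: {self.purpose}")


def answer_with_receipt(answer: str, receipt: Receipt) -> str:
    # An answer with no sources has no lineage, so refuse to emit it.
    if not receipt.sources:
        raise ValueError("refusing to emit an answer with no sources")
    return f"{answer}; {receipt.render()}"


receipt = Receipt(
    sources=("pricing_policy_v6", "contract_acme_2025"),
    retrieved_at=datetime(2025, 6, 1, 14, 22, tzinfo=timezone.utc),  # assumed date
    purpose="pricing_decision",
)
print(answer_with_receipt("12% discount", receipt))
# 12% discount; sources: pricing_policy_v6, contract_acme_2025; retrieved at 14:22Z; purpose: pricing_decision
```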

Provider Notes (reference pattern):

Of course, the Doorman, Ropes, and Clock need a data substrate they can trust. This is where Confluent’s mcp-server comes in—acting as the Doorman itself. It registers only approved Confluent connectors, topics, and Flink views as named tools, with Schema Registry, Stream Governance, and Flink enforcing freshness and policy. Regardless of whether you use Claude, Gemini, Azure OpenAI, or ChatGPT, they always interact through Confluent’s governed doors.

These notes then map the framework to current major AI providers. The goal is to show how the Doorman, Ropes, and Clock idea can be realized in practice across different ecosystems:

- Anthropic (Claude): supports MCP tool use natively. Registering with Confluent’s mcp-server means Claude only sees approved Confluent-managed connectors (e.g., policy search or curated docs), with logs and ownership intact.

- Google Gemini: supports function calling/grounding. Through Confluent’s mcp-server, Gemini receives only pre-labeled, policy-compliant data streams. Stream Governance ensures Gemini never consumes data outside its allowed scope.

- Azure OpenAI: integrates with On-Your-Data RAG. Confluent’s mcp-server governs which sources are exposed, while Schema Registry and Flink enforce schema and freshness before retrieval runs. For example, you might expose a Confluent topic carrying pricing_policy data through Schema Registry with versioning, and let a Flink job apply time bands before Azure retrieves it.

- OpenAI (ChatGPT/Assistants): lets you register custom tools. When paired with Confluent’s mcp-server, every tool call is approved and every response respects labels, time bands, and audit trails. For instance, OpenAI functions can be wired only to Confluent-managed APIs or views, so retrieval always reflects fresh, schema-checked data.

Together, these examples show that while each model provider has its own tooling, Confluent provides the common governance and freshness layer underneath them all, ensuring that Doorman, Ropes, and Clock work reliably regardless of which LLM you choose. Confluent’s own blog post, Powering AI Agents with Real-Time Data Using Anthropic’s MCP and Confluent, illustrates this in practice, showing how the mcp-server integrates topics, Flink SQL, connectors, and governance into MCP tools (see Reference [5]).

How This Relates to Confluent’s MCP Blog

Confluent’s blog post Powering AI Agents with Real-Time Data Using Anthropic’s MCP and Confluent shows how the Confluent mcp-server wires topics, Flink SQL, connectors, and governance into MCP tools. It’s a detailed look at the machinery behind real-time context for AI agents.

This article builds on that foundation. The Doorman, Ropes, and Clock framework gives leaders and practitioners a mental model for data lifecycle management: how to spot drift, enforce eligibility, and carry receipts. The maturity model, checklists, and cross-industry examples extend the MCP narrative beyond wiring to how organizations can adopt these practices step by step.

Use the Confluent post to see how the wiring works. Use this article to understand why drift matters and what you can do about it.

Request Envelope Example

Here’s what a request envelope might look like when calling the system API. It carries identity, purpose, and time requirements so the Doorman, Ropes, and Clock can enforce governance:

    {
      "user_id": "pm-417",
      "role": "pm",
      "tenant": "acme",
      "purpose": "pricing_decision",
      "must_have_labels": ["tenant:acme", "purpose:pricing_decision"],
      "time_band": "hot"
    }

Figure: Example request envelope. The Doorman uses role and tenant to admit tools, the Ropes enforce purpose and labels, and the Clock respects the time_band. Indices come into play later: canonical = curated docs, scratchpad = ad-hoc uploads.

This bridges the architecture with real usage: every call makes its intent explicit, and the system enforces eligibility before retrieval.
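One way to enforce an envelope like this before retrieval is a small validation step. The Python sketch below is an illustration only: `validate_envelope` is a hypothetical function, and the rule that `must_have_labels` must include the caller's own tenant and purpose is an assumption inferred from the example, not something the envelope format is stated to require.

```python
import json

REQUIRED_FIELDS = {"user_id", "role", "tenant", "purpose",
                   "must_have_labels", "time_band"}
TIME_BANDS = ("hot", "warm", "cold", "revoked")


def validate_envelope(raw: str) -> dict:
    """Reject a request before retrieval if its envelope is incomplete."""
    env = json.loads(raw)
    if not isinstance(env, dict):
        raise ValueError("envelope must be a JSON object")
    missing = REQUIRED_FIELDS - set(env)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if env["time_band"] not in TIME_BANDS:
        raise ValueError(f"unknown time_band: {env['time_band']!r}")
    # Assumption: the labels must cover the caller's own tenant and purpose.
    expected = {f"tenant:{env['tenant']}", f"purpose:{env['purpose']}"}
    if not expected <= set(env["must_have_labels"]):
        raise ValueError("must_have_labels does not cover tenant and purpose")
    return env


raw = """{
  "user_id": "pm-417",
  "role": "pm",
  "tenant": "acme",
  "purpose": "pricing_decision",
  "must_have_labels": ["tenant:acme", "purpose:pricing_decision"],
  "time_band": "hot"
}"""
env = validate_envelope(raw)
print(env["tenant"], env["time_band"])  # acme hot
```

Failing closed here means a request with a missing purpose or an unknown time band never reaches the retrieval layer at all.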

Closing Thought

Even good data can turn bad. The difference is whether your system notices—and whether you do anything about it.

Put a Doorman in front of your data. Add Ropes that shape what’s allowed. And let the Clock do what it’s meant to do: remind you what still counts as now.

Before/After Walkthrough:

    graph TB
        subgraph Before
            A[PM asks for discount] --> B[System pulls unlabeled docs]
            B --> C[Includes outdated policy]
            C --> D[Answer looks fine but is invalid]
        end
        subgraph After
            A2[PM asks for discount] --> B2[Doorman admits pricing API + policy]
            B2 --> C2[Ropes enforce tenant + purpose]
            C2 --> D2["Clock filters by hot/warm content"]
            D2 --> E2["Answer: 12% discount; sources: pricing_policy_v6, contract_acme_2025; retrieved 14:22Z"]
        end

Figure: Before, the system uses unlabeled/outdated content. After, Doorman, Ropes, and Clock enforce eligibility, timeliness, and scope, producing a current and auditable answer.

References

[1] Healthcare: BMC Health Services Research, 2022. Challenges to implementing artificial intelligence in healthcare.

[2] Finance: VKTR, 2024. 5 AI Case Studies in Finance.

[3] Cross-industry: Informatica, 2024. The surprising reason most AI projects fail and how to avoid it.

[4] Cross-industry: Fivetran, 2023. Poor data quality leads to $406M in losses.

[5] Confluent: Confluent, 2024. Powering AI Agents with Real-Time Data Using Anthropic’s MCP and Confluent.
